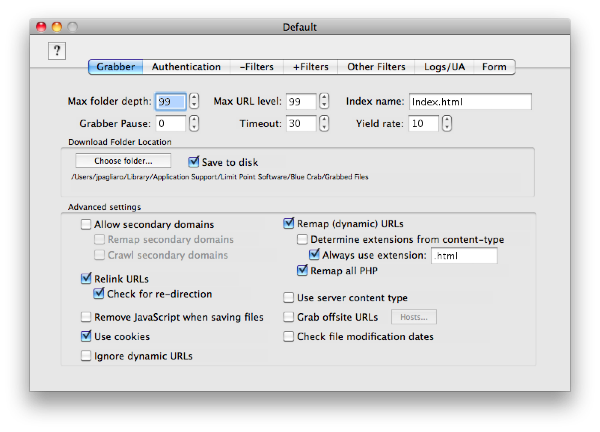

- Max Folder depth

The maximum number of path components (i.e. folders) in a URL for it to be processed. For example, if this value is set to 1 then the following URL will be bypassed

http://www.limit-point.com/BlueCrab/Images/BlueCrab.jpg

because its path contains two folders: BlueCrab and Images.
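The folder count described above can be sketched as follows. This is only an illustration of the rule, not Blue Crab's actual code; the function names `folder_depth` and `within_depth` are hypothetical.

```python
from urllib.parse import urlparse


def folder_depth(url: str) -> int:
    """Count the folders in a URL path, ignoring the final file name."""
    path = urlparse(url).path
    # Everything before the last "/" is the folder part of the path.
    folder_part = path.rsplit("/", 1)[0]
    return len([part for part in folder_part.split("/") if part])


def within_depth(url: str, max_folder_depth: int) -> bool:
    """Return True if the URL would be processed under the given limit."""
    return folder_depth(url) <= max_folder_depth


url = "http://www.limit-point.com/BlueCrab/Images/BlueCrab.jpg"
print(folder_depth(url))     # 2 (BlueCrab and Images)
print(within_depth(url, 1))  # False, so the URL is bypassed
```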

- Max URL level

Blue Crab crawls a website in layers. It starts at a given URL, which is level 0. Blue Crab then extracts all URLs contained in the starting URL (i.e. crawls the starting URL); together these make up level 1. As Blue Crab grabs each URL in level 1, it crawls those resources to collect the URLs that make up level 2.

Blue Crab continues this process until either the current level exceeds this parameter value or all levels have been exhausted and no unvisited URLs remain.

In our experience with Blue Crab, many websites are exhausted by the 7th level.
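The layer-by-layer crawl can be sketched as a breadth-first traversal. In this sketch the `links` dictionary is a hypothetical stand-in for downloading a page and extracting its URLs, and `crawl_levels` is an illustrative name, not part of Blue Crab.

```python
def crawl_levels(start, links, max_level):
    """Return {level: [urls]} for a layer-by-layer crawl.

    `links` maps each URL to the URLs it contains (an assumed stand-in
    for fetching and parsing the page).
    """
    visited = {start}
    levels = {0: [start]}
    current = [start]
    level = 0
    # Stop when the next level would exceed max_level or nothing is left.
    while current and level < max_level:
        next_level = []
        for url in current:
            for found in links.get(url, []):
                if found not in visited:
                    visited.add(found)
                    next_level.append(found)
        if not next_level:
            break  # all levels exhausted
        level += 1
        levels[level] = next_level
        current = next_level
    return levels


site = {"a": ["b", "c"], "b": ["d", "a"], "c": ["d"], "d": []}
print(crawl_levels("a", site, 7))  # {0: ['a'], 1: ['b', 'c'], 2: ['d']}
print(crawl_levels("a", site, 1))  # {0: ['a'], 1: ['b', 'c']}
```

Each URL is recorded at the first level where it is found, so a page linked from several places is visited only once.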

- Index

When Blue Crab grabs a URL that specifies a directory, it must save the contents to a named file, but the URL does not contain a name to use. This parameter specifies that name. For example, when Blue Crab grabs the URL

http://www.limit-point.com/

it will save the contents in the directory "www.limit-point.com" in a file called "Index.html" if that is the value of this parameter.
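A minimal sketch of this naming rule, assuming the saved path is simply the host name followed by the URL path. The function `local_path` is hypothetical, and Blue Crab's real layout may differ in details.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def local_path(url: str, index_name: str = "Index.html") -> str:
    """Map a URL to a path inside the download folder."""
    parsed = urlparse(url)
    path = parsed.path or "/"
    # A path ending in "/" names a directory, so append the Index value.
    if path.endswith("/"):
        path += index_name
    return str(PurePosixPath(parsed.netloc) / path.lstrip("/"))


print(local_path("http://www.limit-point.com/"))
# www.limit-point.com/Index.html
```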

- Grabber pause

This value specifies the duration in seconds between grabs. Even though Blue Crab never opens more than one connection to the server at a time, it may be advisable not to grab URLs in too rapid succession, so that you don't burden the server.

- Timeout

This is the number of seconds Blue Crab will wait for a response from the server before it aborts the current connection and continues with the next URL.
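How the grabber pause and the timeout interact can be sketched as a sequential loop. This is an assumption-laden illustration: `grab_all` and its parameters are hypothetical names, and the `fetch` and `sleep` arguments exist only so the loop can be tried without a network.

```python
import time
import urllib.request


def grab_all(urls, pause_seconds=1.0, timeout_seconds=30.0,
             fetch=None, sleep=time.sleep):
    """Grab URLs one at a time, pausing between grabs.

    A URL whose request fails or times out is recorded as None and the
    loop moves on to the next URL.
    """
    if fetch is None:
        def fetch(url):
            with urllib.request.urlopen(url, timeout=timeout_seconds) as response:
                return response.read()

    results = {}
    for position, url in enumerate(urls):
        if position > 0:
            sleep(pause_seconds)  # the "grabber pause" between grabs
        try:
            results[url] = fetch(url)
        except OSError:
            # URLError and socket timeouts are both subclasses of OSError.
            results[url] = None
    return results
```

Because only one request is in flight at a time, the total running time grows by roughly the pause value for every URL after the first.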

- Yield rate

A good deal of processing is performed for each grab. This processing is executed in a separate task from the main program. So that the program remains responsive, Blue Crab must allocate time to (or yield to) the other tasks it is performing. This value measures the degree to which it does so.

The lower the value the more Blue Crab yields. The smallest value is 1, and that is the recommended value to use.

Note that the lower the value the longer it will take to grab a website.

- Download Folder Location

This is the folder into which Blue Crab will save the downloaded files. More accurately, it is the location in which Blue Crab will create the "host directory." Blue Crab then saves all files it downloads into this host directory. For example, if the starting URL is

http://www.limit-point.com/

then the host directory is named "www.limit-point.com".

- Advanced settings

This group of settings controls how Blue Crab processes the downloaded files to improve their navigability offline. Generally, the default values work just fine.

- Allow secondary domains

A secondary domain is one whose primary host is the same as that of the starting URL. For example, if the starting URL was

http://www.limit-point.com/

then

http://registration.limit-point.com/

would be considered a secondary domain because both have a primary host of "limit-point.com".

If you check this box then Blue Crab will crawl URLs in such secondary domains.

This means that your download folder will contain more than one host directory, one for each secondary domain encountered. In this case there would be two such host directories, namely "www.limit-point.com" and "registration.limit-point.com".

So that you can navigate between the two domains offline, Blue Crab needs to edit the URLs in the downloaded documents. This process is called "remapping secondary domains", and you need to check the corresponding checkbox to have it performed.
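The secondary-domain test can be sketched as a comparison of primary hosts. This sketch assumes the primary host is simply the last two labels of the host name, which matches the example above but is too naive for hosts such as "example.co.uk"; the function names are hypothetical.

```python
from urllib.parse import urlparse


def primary_host(url: str) -> str:
    """Return the last two labels of the host name (a naive assumption)."""
    host = urlparse(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def same_primary_host(url: str, start_url: str) -> bool:
    """Return True if both URLs share a primary host."""
    return primary_host(url) == primary_host(start_url)


start = "http://www.limit-point.com/"
print(primary_host("http://registration.limit-point.com/"))  # limit-point.com
print(same_primary_host("http://registration.limit-point.com/", start))  # True
```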

- Relink URLs

Relinking fixes URLs that are specified absolutely relative to the server, or that were redirected to another URL.
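As an illustration of the first case, a server-absolute link such as "/BlueCrab/Images/BlueCrab.jpg" can be rewritten relative to the document that contains it, so that it still resolves inside the host directory offline. This is a sketch of one possible approach, not a description of Blue Crab's algorithm, and `relink` is a hypothetical name.

```python
import posixpath


def relink(link_path: str, document_path: str) -> str:
    """Rewrite a server-absolute link relative to the containing document."""
    document_dir = posixpath.dirname(document_path) or "/"
    return posixpath.relpath(link_path, start=document_dir)


print(relink("/BlueCrab/Images/BlueCrab.jpg", "/BlueCrab/Docs/index.html"))
# ../Images/BlueCrab.jpg
```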

- Rename dynamic URLs

Dynamic URLs are URLs whose content is generated on the fly by the server (for example, by a CGI program). Such URLs contain "path arguments" and/or "search arguments." For example, this is a dynamic URL:

http://www.limit-point.com/registered_users.iform?name=john%20doe

Such a URL contains data that was generated by a program on the server. If you turn on this option then Blue Crab will save the contents to a disk file whose name is generated in a manner that improves navigation.

If you turn on the sub-option "Determine extensions from content-type" then Blue Crab will use the MIME type for the content (as specified by the server) to set the proper suffix of this name.

Since asking the server for the content type requires additional network activity, it can slow down the download. If you know beforehand what the expected content type is, you can simply turn on "Always use extension:" and set the extension to match the content type. For example, if you expect the content type to be "text/html", it makes sense to always use the extension ".html".
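The two extension options can be sketched as follows. The naming scheme here (file stem plus a short hash of the arguments) and the small `EXTENSIONS` table are assumptions made for illustration; the manual does not specify how Blue Crab actually generates the name.

```python
import hashlib
from urllib.parse import urlparse

# Hypothetical, deliberately small MIME type table.
EXTENSIONS = {"text/html": ".html", "text/plain": ".txt", "image/jpeg": ".jpg"}


def is_dynamic(url: str) -> bool:
    """A URL with path arguments or search arguments is treated as dynamic."""
    parsed = urlparse(url)
    return bool(parsed.params or parsed.query)


def dynamic_file_name(url, content_type=None, always_use_extension=None):
    """Build a file name for a dynamic URL (assumed naming scheme)."""
    parsed = urlparse(url)
    stem = parsed.path.rsplit("/", 1)[-1].rsplit(".", 1)[0] or "page"
    arguments = parsed.params + "?" + parsed.query
    digest = hashlib.sha1(arguments.encode("utf-8")).hexdigest()[:8]
    if always_use_extension is not None:
        extension = always_use_extension  # no extra request to the server
    else:
        mime = (content_type or "").split(";")[0].strip().lower()
        extension = EXTENSIONS.get(mime, "")
    return f"{stem}_{digest}{extension}"


url = "http://www.limit-point.com/registered_users.iform?name=john%20doe"
print(is_dynamic(url))
print(dynamic_file_name(url, content_type="text/html; charset=utf-8"))
print(dynamic_file_name(url, always_use_extension=".html"))
```

Hashing the arguments keeps two requests to the same script with different arguments from overwriting each other on disk.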

- Remove JavaScript when saving files

Sometimes the JavaScript in the downloaded files will impede the navigability of the files offline. If this happens often, you can have Blue Crab remove all JavaScript when it saves a file by turning this option on.
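A rough sketch of what removing JavaScript might involve, assuming that only `<script>` elements are stripped. Inline event handlers such as `onclick` and `javascript:` links are not handled here, and `strip_scripts` is a hypothetical name.

```python
import re

# Matches a complete <script ...> ... </script> element, in any letter case.
SCRIPT_BLOCK = re.compile(r"<script\b[^>]*>.*?</script\s*>",
                          re.IGNORECASE | re.DOTALL)


def strip_scripts(html: str) -> str:
    """Remove every <script> element from an HTML string."""
    return SCRIPT_BLOCK.sub("", html)


page = '<p>Hi</p><script type="text/javascript">alert(1)</script><p>Bye</p>'
print(strip_scripts(page))  # <p>Hi</p><p>Bye</p>
```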

- Use cookies

An HTTP cookie (usually called simply a cookie) is a packet of information sent by a server to a World Wide Web browser and then sent back by the browser each time it accesses that server. If this checkbox is selected, Blue Crab will send back any cookies it receives. This usually results in more accurate downloads, but entails more processing.
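The round trip described above can be sketched with Python's standard `http.cookies` module: the values of the server's `Set-Cookie` headers are collected, then returned in a single `Cookie` request header. The function `cookie_header` is a hypothetical helper, and the sketch ignores expiry, domain, and path matching.

```python
from http.cookies import SimpleCookie


def cookie_header(set_cookie_values):
    """Build a Cookie request header from received Set-Cookie values."""
    jar = SimpleCookie()
    for value in set_cookie_values:
        jar.load(value)  # attributes such as Path are parsed and kept aside
    return "; ".join(f"{name}={morsel.value}" for name, morsel in jar.items())


received = ["session=abc123; Path=/", "theme=dark"]
print(cookie_header(received))  # session=abc123; theme=dark
```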